Before I start, I should say that I'm a big fan of serverless technologies, AWS Lambda in particular. The service is attractively priced (the first million requests per month are free, then $0.20 per million thereafter), it takes care of all the infrastructure configuration, and, because that configuration is hidden from you, it scales automatically with demand. The platform supports multiple programming languages, such as C# (.NET Core), Python, Node.js (JavaScript) and Java. The last two are the most interesting to me, since I have worked with them from the very beginning of my development career and continue to do so to this day. Java is known for its strict typing, its object-oriented-first design and its easy unit testing setup. On the other hand, you can get out of the door quicker with NodeJS by writing less code for the same outcome. In the REST world, JSON is also a first-class citizen in NodeJS, whereas in Java it is mostly produced with the help of third-party libraries. That said, both sides have their strengths and weaknesses, which makes them ideal candidates for this performance test.

Test goal

The goal of this test is to compare the performance of the two runtimes. In this challenge, I will stick to the bare minimum of a REST API and return a Hello World! message on any GET request:

    {
      "message": "Hello World!"
    }

The implementation

In our case, AWS Lambda is used in conjunction with Amazon API Gateway, which requires us to follow the "Lambda proxy integration response" schema:

    {
      "statusCode": 200,
      "headers": {"Content-Type": "application/json"},
      "body": "{\"message\": \"Hello World!\"}"
    }

More details about Lambda proxy integration are available in Amazon's official developer guide. All source code for this test can be found on GitHub: NodeJS and Java. Please note that in the Java version I used the Jackson library to convert the response object into JSON, which may slow things down a bit. It is of course possible to avoid third-party libraries in a case like this by simply hardcoding the JSON string. However, I chose Jackson to stay closer to a real scenario, considering that it is widely used in production and is one of the most efficient JSON converters for Java.
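For illustration, a Node.js handler that produces a response in this shape could look roughly like the sketch below. This is not the code from the repositories mentioned above; the `handler` name and the `payload` variable are assumptions chosen for the example.

```javascript
// Minimal sketch of a Node.js Lambda handler behind API Gateway
// (Lambda proxy integration). Names are illustrative assumptions.
const handler = async (event) => {
  const payload = { message: "Hello World!" };

  return {
    statusCode: 200,
    headers: { "Content-Type": "application/json" },
    // Proxy integration expects the body to be a string, so serialize it.
    body: JSON.stringify(payload),
  };
};

module.exports = { handler };
```

Note that no third-party library is needed here, since `JSON.stringify` is built into the runtime.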

Comparing sequential requests

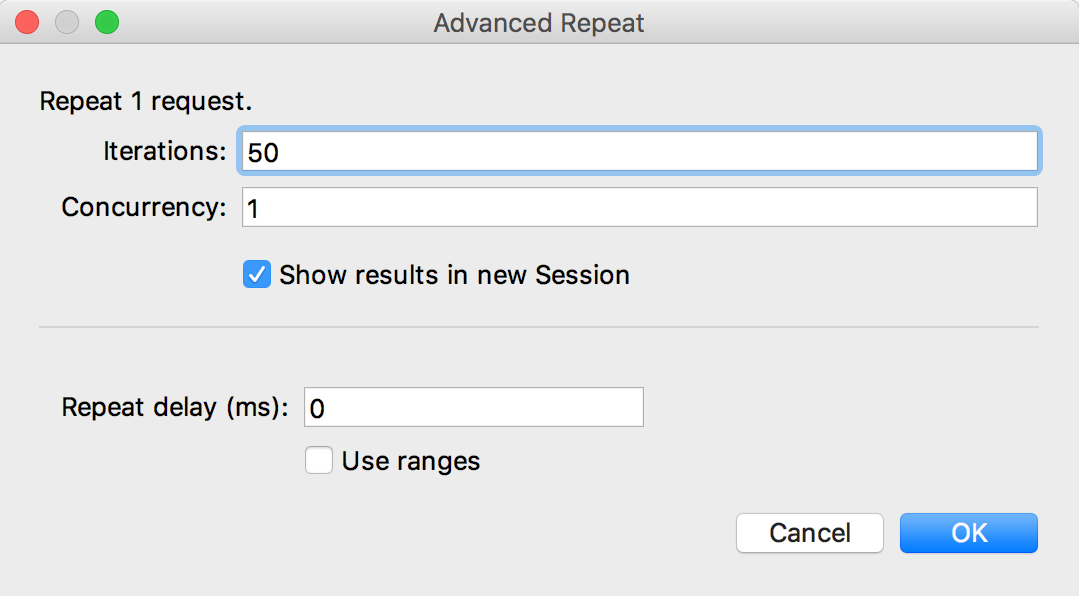

Charles Proxy will be my tool of choice for testing. It's primarily utilized to intercept the outgoing or incoming requests for debugging purposes and there is no straight option to set up this type of measurement out of the box. However, it's possible to execute request using the browser, capture it and repeat countless amount of time with the settings we want to. That's actually what is needed and as an initial step I will configure it in the following way:

As you can see from the screenshot we configured Charles to execute 50 sequential requests. "Concurrency" field is set to 1 which means all request will be sent in order, one by one.
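If you would rather script this kind of measurement than use Charles, the idea of "N requests with a concurrency limit" can be sketched in Node.js as follows. The `runBatch` helper and the `fakeRequest` stand-in are hypothetical names introduced for this example; in a real run, `sendRequest` would perform an HTTP GET against your API Gateway URL.

```javascript
// Hypothetical helper: runs `total` requests with at most `concurrency`
// in flight at once and reports min/max/average latency in milliseconds.
async function runBatch(sendRequest, total, concurrency) {
  const timings = [];
  let next = 0;

  // Each worker keeps taking the next request until none are left.
  async function worker() {
    while (next < total) {
      next += 1;
      const started = process.hrtime.bigint();
      await sendRequest();
      const elapsedNs = process.hrtime.bigint() - started;
      timings.push(Number(elapsedNs) / 1e6); // nanoseconds to milliseconds
    }
  }

  const workers = Array.from({ length: Math.min(concurrency, total) }, () => worker());
  await Promise.all(workers);

  const sum = timings.reduce((a, b) => a + b, 0);
  return {
    count: timings.length,
    min: Math.min(...timings),
    max: Math.max(...timings),
    avg: sum / timings.length,
  };
}

// Stand-in for a real HTTP request (assumption: resolves after about 5ms).
const fakeRequest = () => new Promise((resolve) => setTimeout(resolve, 5));

// Concurrency 1 sends the 50 requests strictly one after another.
runBatch(fakeRequest, 50, 1).then((stats) => console.log(stats));
```

Passing a concurrency equal to the total (for example `runBatch(fakeRequest, 50, 50)`) would send all requests at the same time instead.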

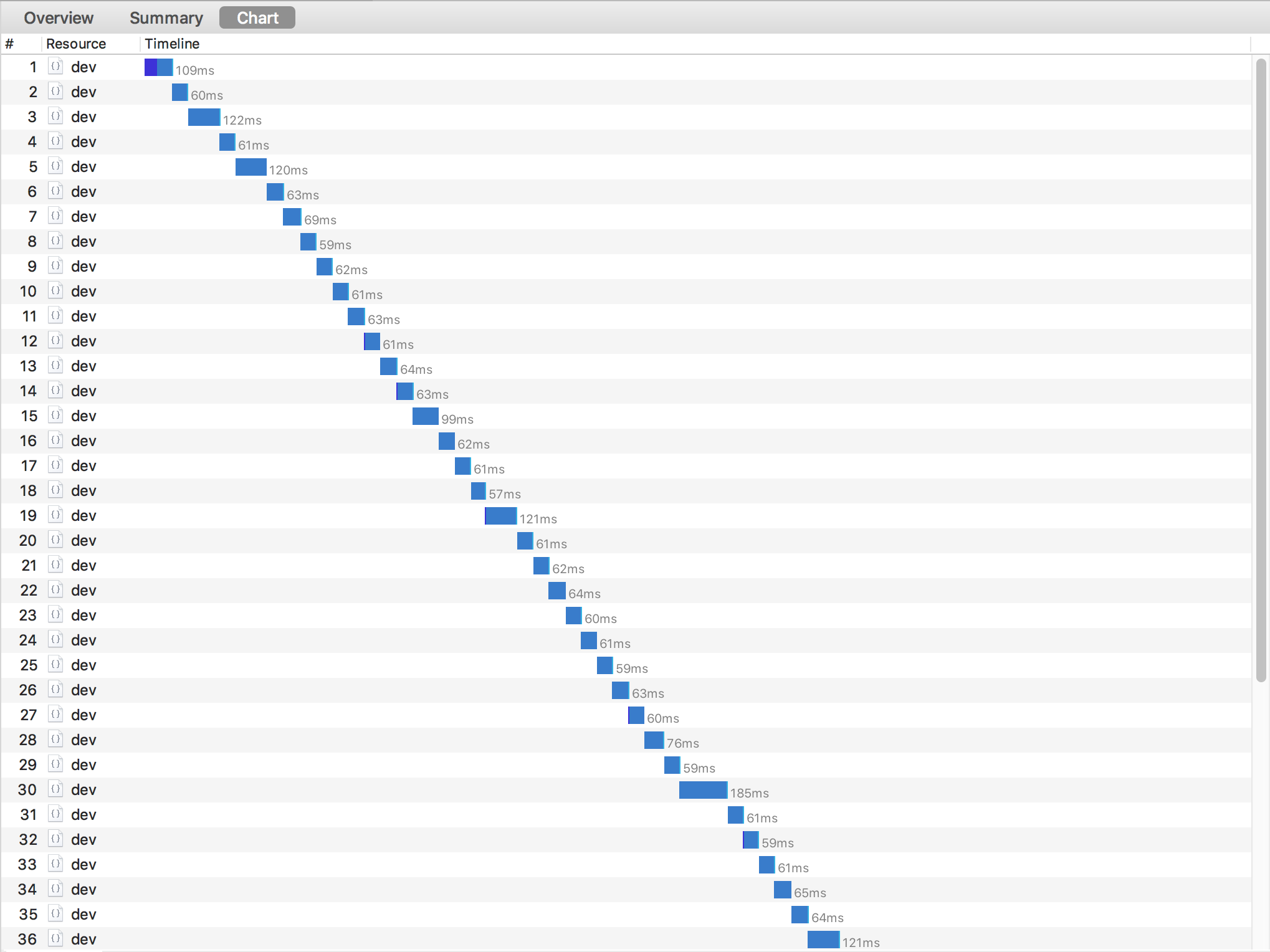

Let's start our test for NodeJS and see how much time will it take to welcome us with the message:

NodeJS spend on average 74ms to say "Hello World", which is actually very fast.

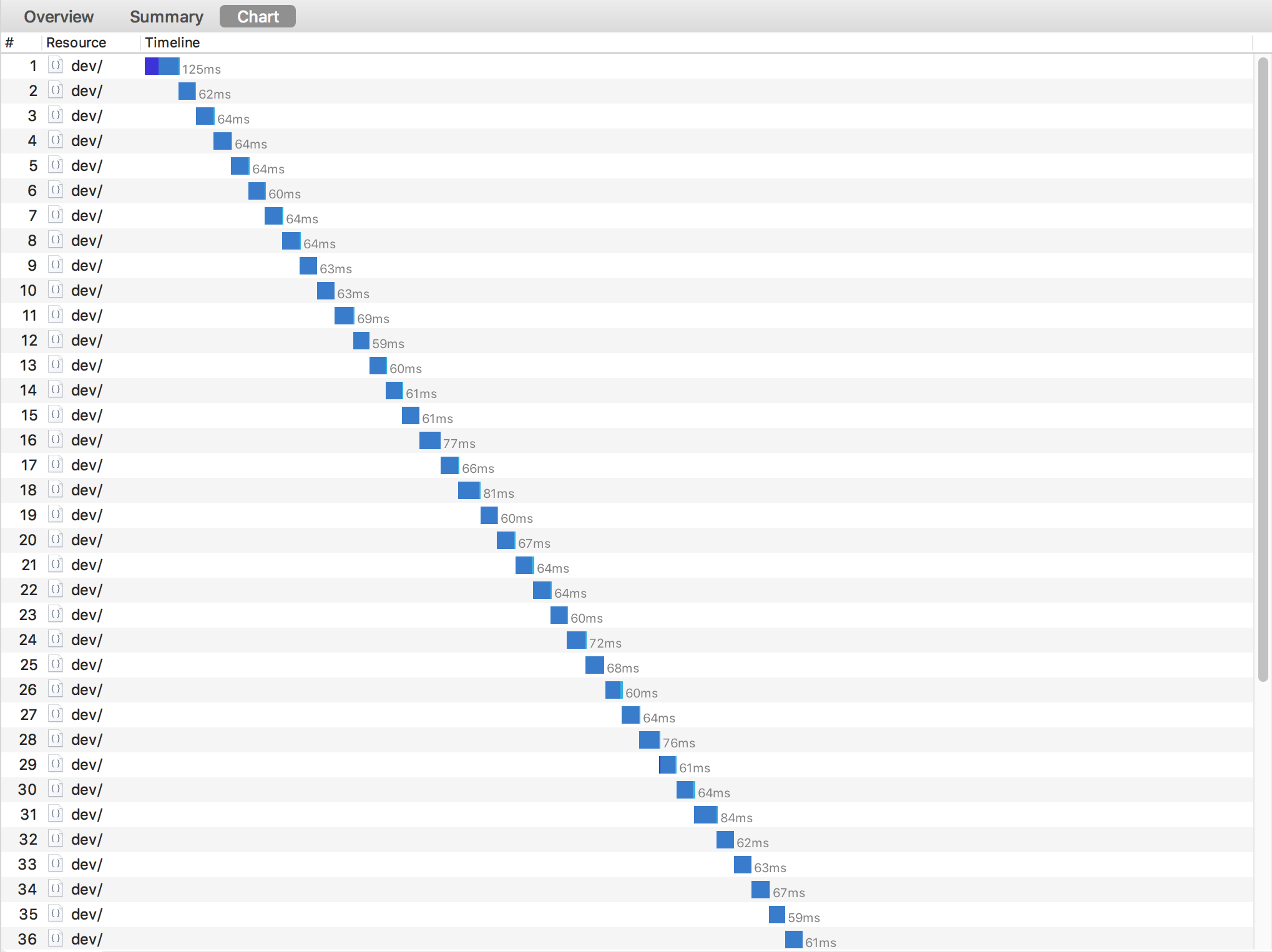

Let's check that for Java:

As might be seen for Java it took a slightly less time with an average of 66ms. Frankly speaking, this is such a small difference that it's not even fair to say that Java is a clear winner considering the fact that testing results may vary from test to test. In my opinion, both platforms showed excellent results and they both clear heroes.

However, there is one problem. In the case above we executed one request right after another, as if our app were used by only one person at a time. Let's imagine a more realistic situation where people order food around meal times, which may result in dozens of concurrent requests. Because of the serverless architecture, for each parallel request that cannot be served by an idle instance, Amazon spins up a "new server" (the technically correct term is an AWS Lambda instance) that executes the application logic. The process of starting a new AWS Lambda instance is known as a cold start, and it can dramatically delay the response depending on a number of factors, such as the number and efficiency of dependencies, the nature of the language, and others. Let's see how both candidates behave in a concurrent scenario.
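One common way to observe cold starts from inside a function relies on the fact that code at module scope runs once per Lambda instance, while the handler runs on every request. The sketch below is an illustrative variation of the Hello World handler, not part of the tested code; the `coldStart` and `instanceAgeMs` fields are assumptions added for the example.

```javascript
// Module scope: executed once, when a new Lambda instance is initialized.
let isColdStart = true;
const instanceStartedAt = Date.now();

const handler = async () => {
  const coldStart = isColdStart;
  isColdStart = false; // later invocations on this instance are warm

  return {
    statusCode: 200,
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({
      message: "Hello World!",
      coldStart, // true only for the first request served by this instance
      instanceAgeMs: Date.now() - instanceStartedAt,
    }),
  };
};

module.exports = { handler };
```

With 50 concurrent requests against an idle function, many responses would be expected to report `coldStart: true`, whereas in the sequential test only the very first one should.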

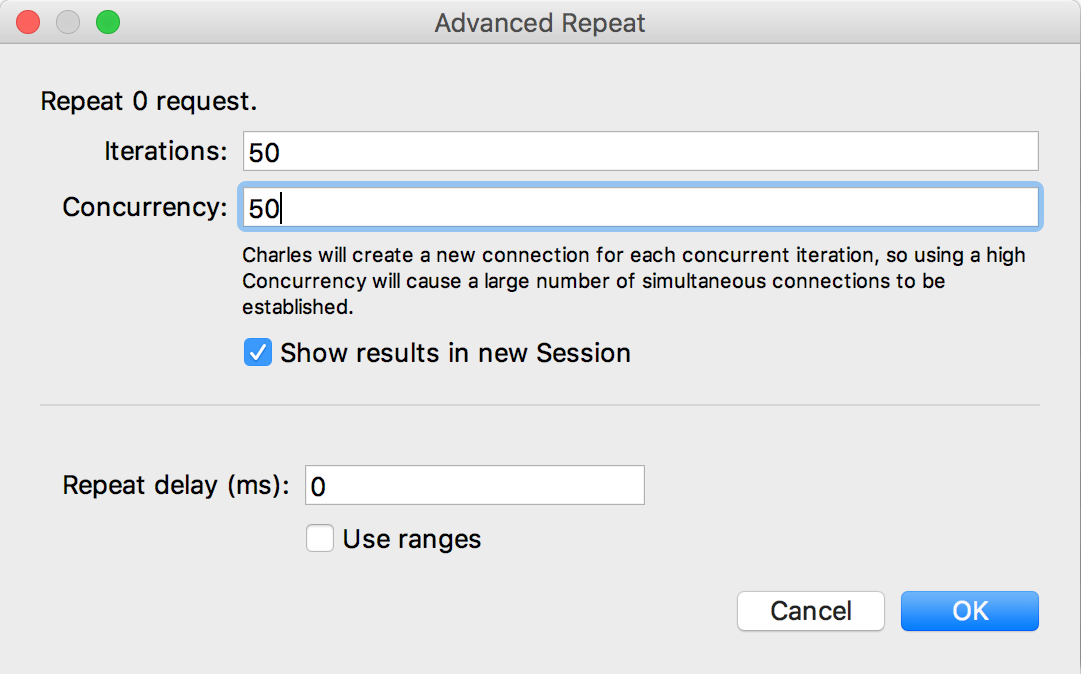

Comparing concurrent requests

For this test, Charles will be configured to execute 50 concurrent requests which mean all of them will go at the same time:

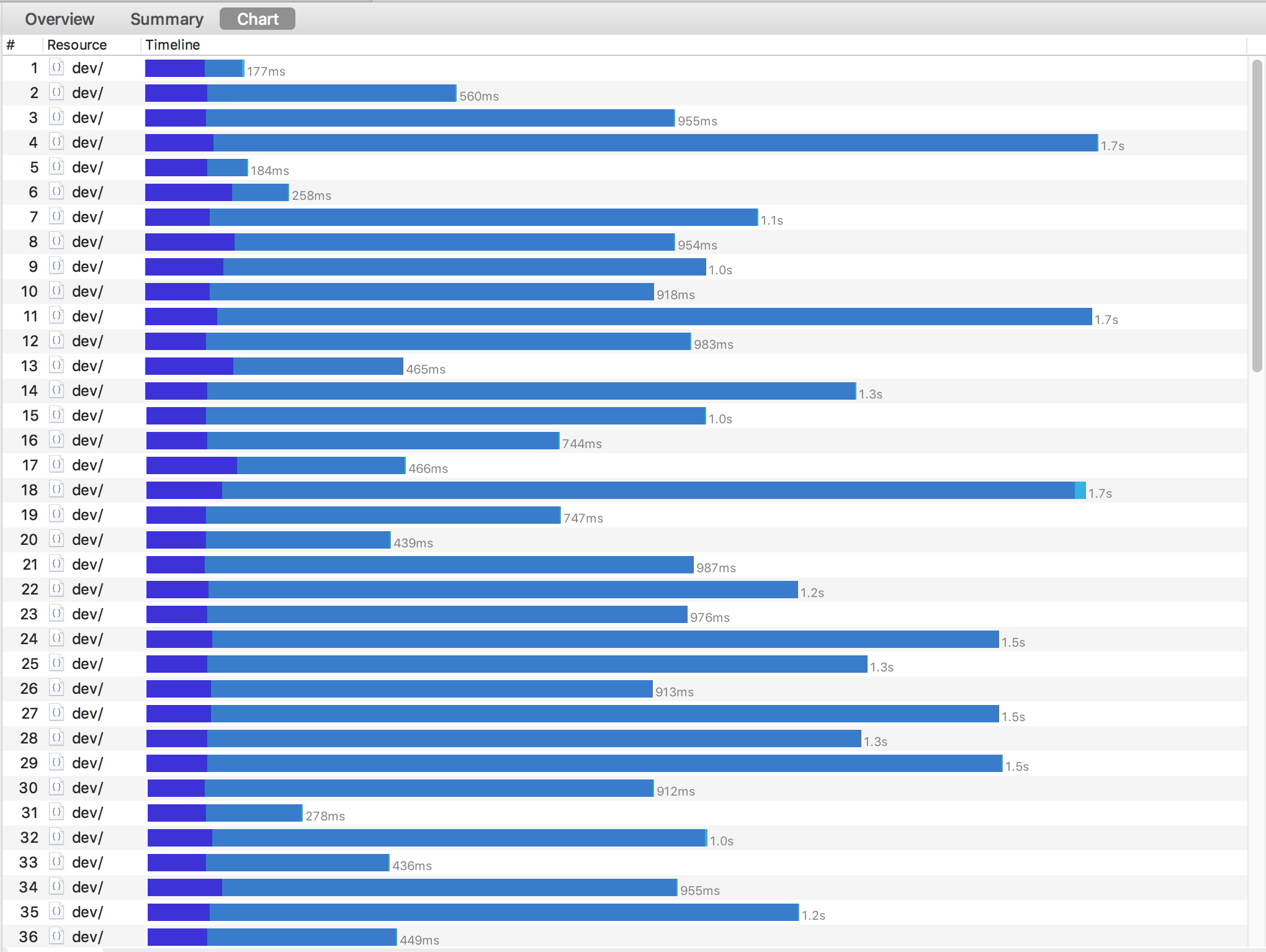

The results for NodeJS:

It is easy to see the results are way different from the sequential processing. There is a longer delay comparing to the previous test. In this particular example, we have high amplitude with the fastest request 177ms, slowest 1.7s, and average 937ms.

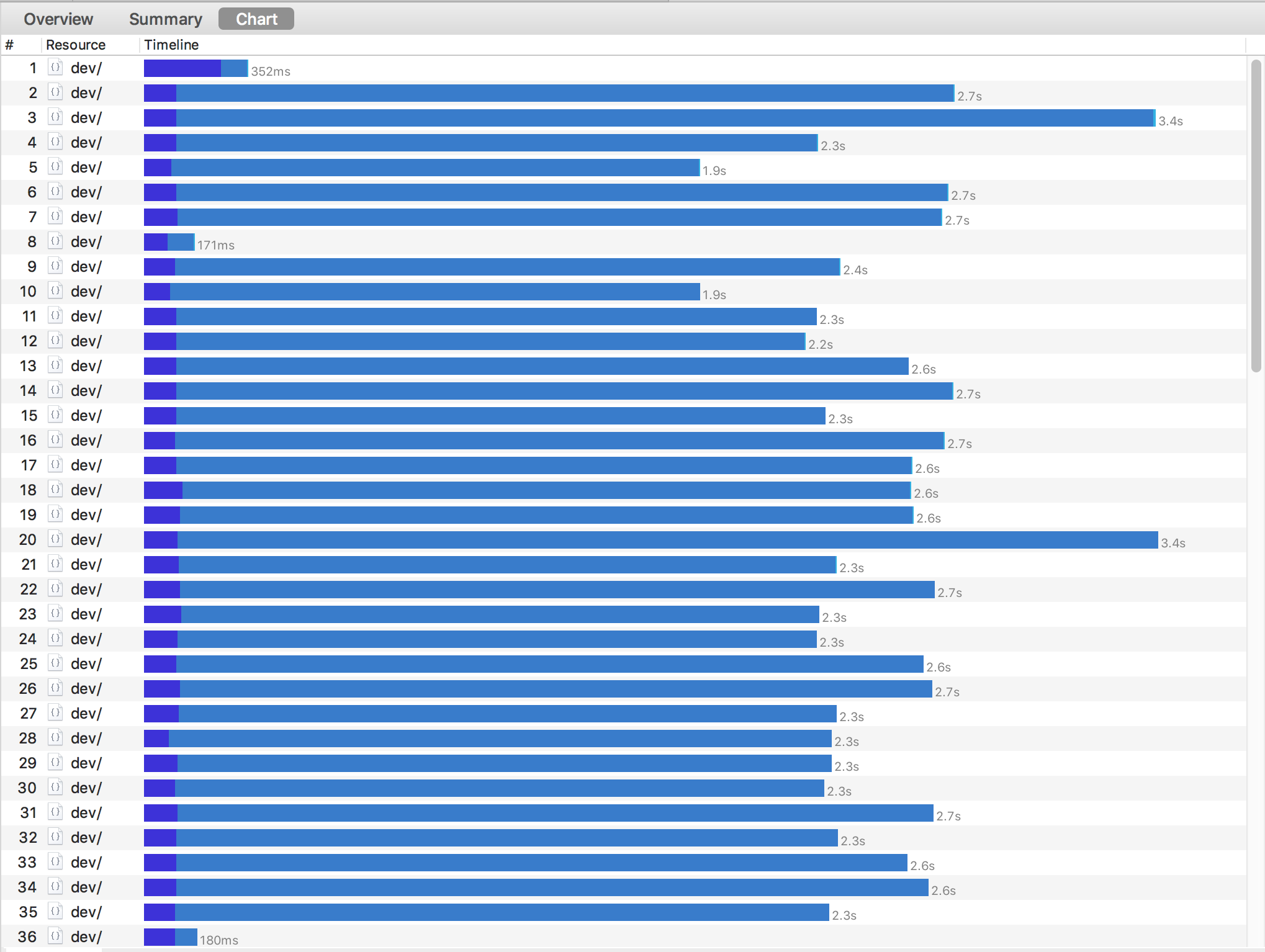

Let's see Java's cold start behavior:

Based on this picture we can state that cold starts in Java are definitely slower than in NodeJS with the fastest processing time of 171ms, slowest 3.4s and average 2.3s.

Summary

| | Java | NodeJS |
|---|---|---|
| Sequential requests avg | 66ms | 74ms |
| Concurrent requests avg | 2.3s | 937ms |
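To put these averages in perspective, the relative slowdown can be worked out with a few lines of arithmetic. The snippet below only uses the four averages from the table; the `averagesMs` and `slowdown` names are made up for the example.

```javascript
// Average response times from the summary table, in milliseconds.
const averagesMs = {
  java: { sequential: 66, concurrent: 2300 },
  nodejs: { sequential: 74, concurrent: 937 },
};

// How many times slower the concurrent average is than the sequential one.
function slowdown(runtime) {
  const { sequential, concurrent } = averagesMs[runtime];
  return concurrent / sequential;
}

console.log(`NodeJS: ${slowdown("nodejs").toFixed(1)}x slower`); // 12.7x
console.log(`Java:   ${slowdown("java").toFixed(1)}x slower`);   // 34.8x

// Java vs NodeJS under concurrency.
const javaVsNode = averagesMs.java.concurrent / averagesMs.nodejs.concurrent;
console.log(`Java is ${javaVsNode.toFixed(1)}x slower than NodeJS`); // 2.5x
```

These figures come from single test runs, so they are best read as rough orders of magnitude.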

Conclusion

Cold starts certainly affect the speed of an application, and they should definitely be taken into consideration when developing any type of service. Even though NodeJS is the clear winner when it comes to parallel execution, Java should still be considered as a platform of choice for serverless applications because of its language features and large ecosystem. Looking forward, Java 9 may reduce AWS Lambda start time thanks to features like Oracle JDK's AOT (ahead-of-time compilation).

Despite all of that, you can still avoid most of the delay for any runtime by using a "warm-up" technique: send one or more ping requests using a trigger (it might be another Lambda function that runs on a schedule) to wake the "sleeping" API. In that scenario, when a user hits the endpoint, the response will come back with minimal latency, as in sequential execution. The effect can also be mitigated by increasing the Lambda memory size and by optimizing the code and dependencies in the project.
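As a rough sketch of the warm-up idea, a second Lambda function triggered on a schedule could send a handful of concurrent pings to the API. Everything named here is an assumption for illustration: the `WARMUP_URL` and `WARMUP_PINGS` environment variables, the placeholder URL, and the `warmUp` helper. The sketch also assumes a Node.js runtime that provides a global `fetch` (Node 18 or later).

```javascript
// Assumed configuration; replace with your own API Gateway endpoint.
const TARGET_URL = process.env.WARMUP_URL || "https://example.com/hello";
const PINGS = Number(process.env.WARMUP_PINGS || 5);

// Sends `count` pings at the same time so that several instances may be
// started, and counts how many pings received a response.
async function warmUp(ping, count) {
  const results = await Promise.allSettled(
    Array.from({ length: count }, () => ping())
  );
  const succeeded = results.filter((r) => r.status === "fulfilled").length;
  return { sent: count, succeeded };
}

// Entry point for the scheduled trigger.
// Note: fetch resolves for any HTTP status, so "succeeded" only means
// that the endpoint answered, not that it returned 200.
const handler = async () => warmUp(() => fetch(TARGET_URL), PINGS);

module.exports = { handler, warmUp };
```

How many pings to send and how often to schedule them depends on the traffic you expect, so treat both values as starting points to tune.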

In the end, our goal as developers is to make users happy and to build a flexible product, so that it's easy to add a new feature or change existing functionality. I hope this article gave you some technical insight and helps you make an architectural choice for your next application.